KEYNOTE PRESENTATIONS

1. Trisalyn Nelson - The Digital Twin: Untangling Spatial Pattern and Process

2. Bo Zhao - A glimpse into digital twins: The coming era of more-than-human GIScience.

3. Kelly Easterday - Implementing a Digital Twin for Conservation: Lessons and Applications from the Dangermond Preserve.

4. Shaowen Wang - Toward a Reproducible and Scalable Platform for Geospatial Digital Twins

5. Michael Batty - Defining Digital Twins: Multiple Models at Different Scales

6. Pascal Mueller - Digital Twin Technology & GIS

7. Clancy Wilmott - Title TBD

8. Maryann Feldman - Title TBD

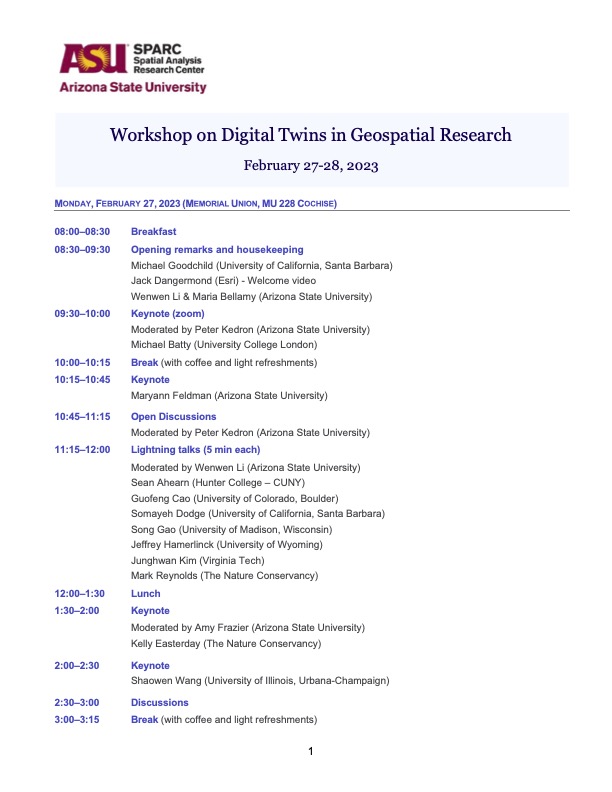

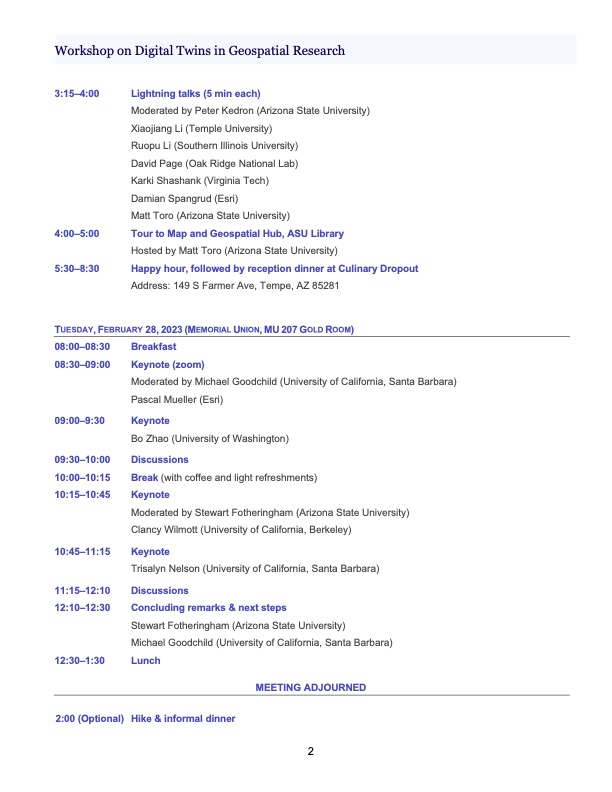

PROGRAM

A Specialist Meeting on Digital Twins

Under the auspices of Arizona State University’s Spatial Analysis Research Center (SPARC)

Version of 11/8/22

Background

A digital twin can be defined as “A virtual representation of the real world, including physical objects, processes, relationships, and behaviors” (Esri). As such, it allows experiments to be carried out on the digital twin rather than on reality, and allows predictions to be made about the behavior of the real system without incurring the costs and side effects of implementing interventions in reality. Digital twins offer access to many types of data associated with a system and to the processes that govern the system’s dynamic behavior, and their emphasis on fine spatial resolution can support very compelling visualizations. Digital twins have been attracting attention in recent years in a host of fields ranging from manufacturing and urban planning to oceanography and ecology.

Digital twins overlap with GIS whenever the system to be twinned exists in the geographic domain and at geographic scales, that is, on or near the Earth’s surface and oceans and at resolutions from 1 cm up to 10 km. In this context Esri goes so far as to assert that “GIS is foundational for any digital twin”, and in the remainder of this discussion the GIS foundation will be assumed. The concept is clearly related to the Digital Earth that was the subject of a speech by Vice-President Gore in 1998 and has been the theme of much literature, a series of international conferences, an international journal, and a recent manual (Guo, Goodchild, and Annoni, 2020). However, the Digital Earth as conceived by Gore and implemented in Google Earth falls somewhat short of the current vision of a digital twin in lacking representations of processes and thus the opportunity for interventions and predictions.

The need for a specialist meeting

Much of the energy behind digital twins derives from their value in prediction and assessment, and with some exceptions the academic community has paid them little attention to date. NASA recently held a highly over-subscribed workshop on digital twins, with strong participation from the private sector and NASA-supported research groups. The membership of the Digital Twin Consortium includes a handful of academic institutions (Monash University, University of Melbourne, University of Alaska at Anchorage, the University of Maryland’s Center for Environmental Energy Engineering, and the Universitat Politècnica de Catalunya are currently listed as members), and other digital twin projects have roots and links in academia (such as the Gemini Project at the University of Cambridge, and the DITTO Project, which promotes the construction of digital twins of the oceans). Nevertheless, it is time for a more comprehensive and dedicated academic look at the concept that is able to ask the kinds of questions that are characteristic of academia: questions about the reliability and validity of predictions from digital twins, about the deeper technical issues of digital twin design, about the ethical and societal implications of digital twins, and about educating and training the next generation of digital twin developers and users. The next subsections explore each of these four themes in turn.

Reliability and validity

As a representation a digital twin must necessarily be incomplete, since it is impossible to create a perfect digital representation of any part of reality and impossible therefore to create a perfect digital twin: “the map is not the territory” (Korzybski, 1933*). It follows that the representations in the digital twin are subject to uncertainty, and that these uncertainties propagate through to its predictions and visualizations. In this respect digital twins are no different from any GIS, or for that matter from views of the world as obtained through the human eye. The problem has been dealt with most often by arguing fitness for purpose: that it is the use case that must determine the levels of uncertainty that can be accepted. For example, the spatial resolution of the data in a digital twin must be sufficiently fine to exclude any possibility that the desired predictions will be significantly impacted by real events, features, or relationships that are too small to have been captured. In short, there is an essential and unavoidable relationship between a digital twin and its associated use cases.

Much is now known about uncertainty in geospatial data as a result of more than three decades of research. However, the use of digital twins and advances in geospatial data acquisition raise new issues, including:

Is there an ideal definition of a digital twin? The definition cited earlier focuses on representation, and yet it is most often visualization, as well as the ability of a digital twin to predict successfully in specific use cases, that attracts attention.

How can the uncertainties in the new data sources (e.g., social media sources, LiDAR acquisition of 3D city structure) being exploited in digital twins be measured, represented, modeled, and propagated through digital twin simulations?

What research is needed into the ways in which independent uncertainties from multiple data sources combine in the simulations of digital twins? Are there limits to the degree to which multiple data sources can be fused?

Are there ways of introducing uncertainty into the visualizations that can be used to understand and assess the usefulness and usability of the models?

How can users be alerted to the importance of uncertainty and the need to propagate it into digital twin predictions?

What are the consequences of ignoring uncertainty in working with digital twins? How does this uncertainty impact real-world decisions that might be made based on a digital twin?
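The propagation question raised above can be made concrete with a minimal sketch. It assumes a toy "twin" that predicts flood depth as the difference between a water level and a ground elevation, each with independent Gaussian error. The model, the names `propagate` and `flood_depth`, and all numeric values are hypothetical and chosen only for illustration; they do not come from any particular digital twin. The sketch uses Monte Carlo sampling, which is one common way to propagate input uncertainty into a prediction, not the only one.

```python
import random
import statistics


def propagate(model, sources, n_draws=20000, seed=0):
    """Monte Carlo propagation: sample every uncertain input, run the model
    on each sample, and summarize the spread of the outputs."""
    rng = random.Random(seed)
    outputs = []
    for _ in range(n_draws):
        # Draw one plausible value per input from its assumed Gaussian error model.
        sample = {name: rng.gauss(mean, sd) for name, (mean, sd) in sources.items()}
        outputs.append(model(**sample))
    return statistics.mean(outputs), statistics.stdev(outputs)


def flood_depth(water_level, ground_elevation):
    """Hypothetical toy process model: depth of water above the ground (metres)."""
    return water_level - ground_elevation


# Assumed inputs as (mean, standard deviation) in metres; illustrative values only.
sources = {
    "water_level": (3.0, 0.20),       # e.g. from a gauge
    "ground_elevation": (2.5, 0.15),  # e.g. from a LiDAR-derived surface
}

mean_depth, sd_depth = propagate(flood_depth, sources)
print(f"predicted depth: {mean_depth:.2f} m, uncertainty (1 sd): {sd_depth:.2f} m")
```

For this simple difference of two independent inputs, the standard deviation of the prediction should be close to the square root of the sum of the input variances, so the predicted depth is less certain than either input alone. Real digital twins involve correlated errors and nonlinear processes, for which such closed-form checks are generally not available.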

Technical issues of digital twin design

As digital twins proliferate, increasing attention is being devoted to their interactions. The buildingSMART International organization has published a paper that addresses the concept of a digital twin ecosystem as a collaboration between interacting digital twins, and while the DITTO Project is actively advancing the notion of a digital twin for the oceans, it will clearly need to address relationships between the oceans and the terrestrial third of the globe, if only in coastal zones. Digital twins may coexist in the same geography, perhaps at different scales, raising more questions about the technology of interactions.

At a different level there is a need for standards for such topics as data models, maximum levels of uncertainty that are consistent with the term digital twin, terminology, and mechanisms to support search for digital twins. The following is a selection of questions that might prompt research on these topics:

Can digital twin designs be made extensible, to support changes in geographic areas of coverage, additional use cases, and refinement of spatial or temporal resolution?

What 3D and spatiotemporal data models are best suited to implementation in digital twins?

Is there a need for additional methods of data fusion and integration in support of digital twins?

What forms of search can be implemented to support discovery of digital twins? How can the prediction capabilities and uncertainties of digital twins be discovered through search? Is there a need for standards of description, as extensions of existing metadata standards?

Ethical and societal issues

A recent white paper from the GeoEthics Project, “Locational Information and the Public Interest,” has drawn attention to the rapidly expanding set of ethical issues raised by the extensive use of geospatial technology. Many of these issues are directly relevant to digital twins, including the potential for surveillance and invasion of privacy and the potential for drawing inappropriate inferences from geospatial analytics. There is a need for reflection, not only on these issues and others in relation to digital twins, but also on the broader issues of digital twins for society. In this respect the research agenda should seek to advance the established literature on the societal impacts of GIS (e.g., Pickles, *). Following are some possible topics for further discussion:

Can digital twins be repurposed from their intended applications, and might such repurposing raise ethical issues? How might the user interface of digital twins be designed to minimize such possibilities?

When individuals are represented and modeled in a digital twin, how might their privacy be protected?

How should the results, predictions, and visualizations of a digital twin be presented while placing appropriate emphasis on uncertainty?

What rules and regulations currently govern the ownership of digital twins and their data and processes? Is there a need for additional regulation?

What criteria should a project be required to satisfy to merit the designation as a digital twin? Is there evidence that the term is being misused, or that false claims are being made about specific instances?

What levels of openness and transparency should be required of digital twins when used by a) public agencies, b) research projects, c) private consulting companies, or d) commercial software producers?

In what ways might the visualization functions of digital twins be engineered to mislead?

Education and training

The specialist meeting will also devote a portion of its time to developing an agenda for education and training. At this time there is a general lack of publications, textbooks, and teaching materials on digital twins, and little information is available to help an instructor to introduce digital-twin content into courses. This is similar to the situation facing GIS instruction in the 1980s, and it may be helpful to review the efforts made at that time in response. Following are some potential topics for discussion at the specialist meeting and in follow-on activities:

What principles underlie digital-twin technology that are likely to survive the next decade and might form the basis of an upper-division course?

How should future users and developers of digital twins be trained, and on what existing software?

What example applications of digital twins might form a suitable gallery for a course? Have some digital-twin projects failed, and might these failures be included in a course?

Should digital twins be taught as one or more separate courses, or should they be micro-inserted into existing courses?

What understanding of the principles of spatial uncertainty and geovisualization should be a) expected of enrollees in digital-twin courses, or b) taught as part of those courses?

The proposed format of the specialist meeting

We propose to hold the specialist meeting in Tempe, AZ in late February 2023. Participants would arrive on Sunday Feb 26 in time for an ice-breaker dinner. Sessions would fill Monday Feb 27 and the morning of Feb 28. Participants would form their own groups for dinner on Monday and join a workshop dinner on Tuesday. The afternoon of Feb 28 would provide an opportunity for further networking during a hike somewhere near Tempe.

We propose to invite four to six keynote speakers, arranging them in pairs in the first sessions on Monday morning, Monday afternoon, and Tuesday morning. Some keynotes would be presented remotely. Each participant would have the opportunity for a short lightning talk. There will also be time in the program for plenary discussions following the keynotes, and for breakout discussions on specific topics. The formal sessions will end with a wrap-up and discussion of follow-on activities, which might include sessions at conferences, journal articles, one or more special issues of journals, and books.

In our view, the ideal size of the specialist meeting will be about 35 participants. Opportunities will be created for students to attend and to participate as note-takers. We will ensure that the mix of participants includes a diversity of disciplines, genders, ethnicities, and ages. Participants will be recruited through extensive advertising of the specialist meeting. Applicants will be asked to provide a one-page resume and a one- or two-page statement of their personal interests in digital twins and in attending the specialist meeting.

References

Guo, H., M.F. Goodchild, and A. Annoni, editors (2020) Manual of Digital Earth. Singapore: Springer. DOI: 10.1007/978-981-32-9915-3.